FER+

FER+ 注释为标准的 Emotion FER 数据集提供了一组新的标签。在 FER+ 中,每张图片都有10个众包标签,这比原始的FER标签提供了更好的静态图像情感的真相。每个图像有10个标记,研究人员就可以估计每张脸的情绪概率分布。这允许构建产生统计分布或多标签输出的算法,而不是传统的单标签输出,如下所述:https://arxiv.org/abs/1608.01041

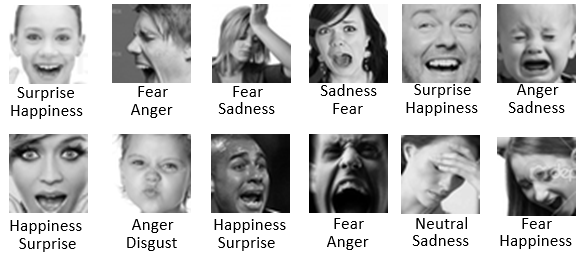

下面是从上述论文中提取的 FER 和 FER+ 标签的一些例子(FER top,FER + bottom):

新的标签文件名为 fer2013new.csv,它包含与原始 fer2013.csv 标签文件具有相同顺序的相同行数,以便您可以推断哪个情感标签属于哪个图像。由于我们无法托管实际的图像内容,请在这里找到原始的 FER 数据集:https://www.kaggle.com/c/challenges-in-representat...

CSV文件的格式如下:使用,中性,幸福,惊喜,悲伤,愤怒,厌恶,恐惧,轻蔑,未知,NF。列“用法”与原始 FER 标签相同,以区分训练、公共测试和私人测试集。其他列是每个情感的投票数加上未知和 NF(不是一个面孔)。

训练

我们还为 https://arxiv.org/abs/1608.01041 中描述的所有训练模式(多数,概率,交叉熵和多标签)提供了一个训练代码。训练代码使用 MS 认知工具包(以前称为CNTK):https://github.com/Microsoft/CNTK。

在安装 Cognitive Toolkit 并下载数据集(我们将在下面讨论数据集布局)之后,您可以简单地运行以下操作来开始训练:

对于多数投票模式

python train.py -d <dataset base folder> -m majority

对于概率模式

python train.py -d <dataset base folder> -m probability

对于交叉熵模式

python train.py -d <dataset base folder> -m crossentropy

对于多目标模式

python train.py -d <dataset base folder> -m multi_target

FER+ 训练布局

有一个名为 data 的文件夹具有以下布局:

/data

/FER2013Test

label.csv

/FER2013Train

label.csv

/FER2013Valid

label.csv

label.csv 每个文件夹中的 label.csv 包含每个图像的实际标签,图像名称采用以下格式:ferXXXXXXXX.png,其中XXXXXXXX是原始FER csv文件的行索引。所以这里的头几个图像的名字:

fer0000000.png fer0000001.png fer0000002.png fer0000003.png

该文件夹不包含实际的图像,你需要从https://www.kaggle.com/c/challenges-in-representat...下载,然后提取所有与“训练”对应的图像进入 FER2013Train 文件夹,对应 “PublicTest” 的所有图像进入 FER2013Valid 文件夹,对应 “PrivateTest” 的所有图像进入 FER2013Test 文件夹。或者你可以使用generate_training_data.py 脚本来完成上述所有的操作,就像下一节提到的那样。

训练数据

我们在 python 中提供了一个简单的脚本 generate_training_data.py,它将fer2013.csv 和 fer2013new.csv 作为输入,合并这两个 CSV 文件,并将所有图像导出为一个png文件供教练员处理。

python generate_training_data.py -d <dataset base folder> -fer <fer2013.csv path> -ferplus <fer2013new.csv path>

引文

@inproceedings{BarsoumICMI2016,

title={Training Deep Networks for Facial Expression Recognition with Crowd-Sourced Label Distribution},

author={Barsoum, Emad and Zhang, Cha and Canton Ferrer, Cristian and Zhang, Zhengyou},

booktitle={ACM International Conference on Multimodal Interaction (ICMI)},

year={2016}

}